背景

近期,线上报障了多起Pod删除失败的Case,用户的多次删除请求均已失败告终。Pod删除失败的影响主要有二:

- 面向用户:用户体验下降,且无法对该Pod执行后续的发布流程

- 面向弹性云:失败率提升,SLA无法达标

并且,随着弹性云Docker版本升级 (1.13 → 18.06) 进度的推进,线上出现Pod删除失败的Case隐隐有增多的趋势。

线上问题无小事!不论是从哪个角度出发,我们都应该给线上环境把把脉,看看是哪个系统出了问题。

问题定位

由于线上出Case的频率并不低,基本每周都会出现,这反而给我们定位问题带来了便利^_^。

排查线上问题的思路一般分如下两个步骤:

- 定位出现问题的组件

- 定位组件出现的问题

组件定位

对于弹性云的同学来说,定位问题组件已经有一套标准流程:从上往下,看看问题是由哪个组件引起。【不清楚组件通信流程的同学可以看看容器删除失败排查】

1)第一嫌疑人:kubelet

在kubernetes体系架构下,删除Pod的执行者就是kubelet,作为第一个提审对象,它不冤。

虽然无奈,但是kubelet也早已习惯了,并且在多次经历过社会的毒打之后,练就了一身的甩锅能力:

1 | I0624 11:00:26.658872 21280 kubelet.go:1923] skipping pod synchronization - [PLEG is not healthy: pleg was last seen active 3m0.439656895s ago; threshold is 3m0s] |

什么意思?死贫道不死道友呗。

PLEG模块不健康?PLEG是kubelet的一个子模块单元,用来统一管理底层容器的运行状态。

kubelet招供:我是好人啊,不能冤枉我,都是docker惹的祸!

2)第二嫌疑人:dockerd

根据kubelet的证词,我们很快提审本案的第二嫌疑人:dockerd。

为自证清白,dockerd三下五除二打出一套军体拳:

1 | # docker ps |

该容器恰好属于用户删除失败的Pod。

你们可能没看到当时dockerd的脸色,面如死灰,且一直喃喃自语:难道真是我的锅?

好几分钟后,dockerd才慢慢缓过来,理了理思绪,想好了一套甩锅流程:虽然问题出现在我这,但是你们的证据不足,不能证明是我亲手干的。我手下养着一大帮人,可能是一些小弟自己偷偷干的。

嘿,你还有理了,那好,我继续收集证据,让你死的明明白白。

3)第三嫌疑人:containerd

作为dockerd手下的二当家,我们首先传讯了containerd。这人一看就老实忠厚,它看着dockerd的证词,苦笑了下,吐槽到:跟着大哥这么多年,还是没有得到大哥的信任(所以kubernetes在1.20版本中,将containerd扶上了大哥的位置?哈哈)。

containerd有条不紊的祭出三板斧:

1 | # docker-containerd-ctr -a /var/run/docker/containerd/docker-containerd.sock -n moby c ls |

containerd神色不自然的说:不好意思啊,警官,可能是我家里不争气的孩子惹的祸,这孩子以前也犯过事【docker hang 死排查】。

4)第四嫌疑人:containerd-shim

containerd的话还没说完,待在一旁的儿子containerd-shim跳出来指着containerd叛逆地说:你凭什么说是我?你自己干的那些破事,我都不稀罕说你。

清官难断家务事!案件排查至此,从已知证据,还真不好确认到底是老子,还是儿子犯的罪。

5)第五嫌疑人:runc

万般无奈,我们清楚了本案的最后一个嫌疑人,也是年纪最小的runc。runc只会呀呀自语地说:不是我,不是我!

1 | # docker-runc --root /var/run/docker/runtime-runc/moby/ list |

从现场收集到的证据表明,这个案件和runc还真没什么关系,案发时,它已经离开了现场,只不过留下了一个烂摊子等着别人来清理。

从众人招供的语录来看,案件嫌疑人初步锁定了containerd与containerd-shim,但是具体是谁,都还不好说。

正当大家一筹莫展之际,一位老刑警从现场提取到了一些新的线索,案件终于有了新的进展。

6)新线索

老刑警领着大家观察它收集到的新线索:

1 | # sudo docker ps -a | grep PODNAME |

同一个Pod内pause容器依然退出,但是业务容器却没有退出,并且业务容器关联的containerd-shim进程并未执行子进程收割动作,就像是卡住了。

面对这些新证据,containerd-shim毫无征兆地崩溃了,大哭道:为什么总是我?

问题定位

言归正传,我们如何能根据上述现象快速定位问题呢?思路有三:

- 拿着现象问谷歌

- 带着问题看代码

- 深挖现场定问题

1)谷歌大法

当我们拿着问题呈现的现象搜索谷歌时,还真搜到了关联的内容:Exec process may cause shim hang。

该issue中所描述的内容和我们碰到的问题基本一致。问题是由于 reaper.Default 处定义的channel大小太小引起的,调整channel大小可规避该问题。

2)理解代码

尽管本问题可以通过一些手段规避,但是我们还是需要理解代码中出现的问题。

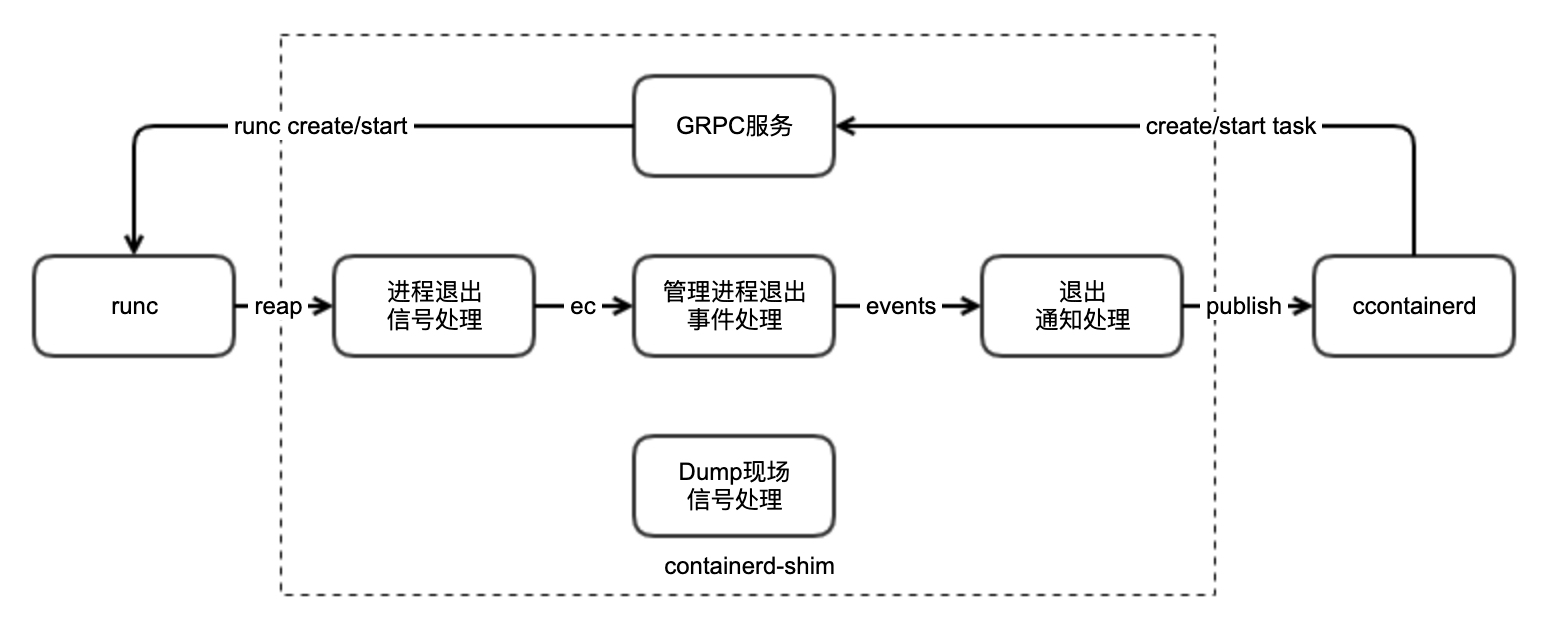

containerd-shim的主要事务是执行runc命令,主要功能是托管真正的容器进程,并暴露一个服务,供外部用户与容器进行交互。

containerd-shim内部处理逻辑如下图所示:

GRPC服务:containerd-shim的核心服务,对外暴露众多接口,诸如创建/启动task等,并调用runc执行对应的命令。

此外,containerd-shim内启动了三个协程(包含主协程)共同处理容器内进程退出事件。首先是主协程handleSignals:

1 | func handleSignals(logger *logrus.Entry, signals chan os.Signal, server *ttrpc.Server, sv *shim.Service) error { |

containerd-shim调用system.SetSubreaper将自己作为容器内进程的收割者,一般容器内的1号进程也具备收割僵尸进程的能力,因此containerd-shim更多的是收割runc exec进容器内的进程。

当有僵尸进程出现时,就执行收割逻辑:

1 | func Reap() error { |

那么谁又是订阅者呢?shim在初始化时就订阅了一份进程退出事件:

1 | func NewService(config Config, publisher events.Publisher) (*Service, error) { |

其中退出事件处理逻辑如下:

1 | func (s *Service) processExits() { |

只处理s.processes的退出事件,而s.processes关联的都是什么对象呢?主要有二:

1 | // Create a new initial process and container with the underlying OCI runtime |

代码就展示这些,其他代码大家感兴趣的自己查阅。

现在,我们再来根据现象查问题。从现象可知,异常容器待收割的僵尸进程较多,肯定超过了32个。当shim在收割众多僵尸进程时,往订阅者信道(大小32)中发送时,出现阻塞,阻塞点:阻塞信号,并且此时持有Default.Lock这一把大锁。

那么只要这时候再有人来申请这把锁,就会形成死锁。

那么究竟谁会来申请这把锁呢?这时候,要是能查看containerd-shim的协程栈就好了。

3)现场分析

确实,containerd-shim启动了一个协程方便用户导出协程栈信息,我们来看看能不能行呢?

1 | func executeShim() error { |

一切看起来貌似没什么问题,我们发送SIGUSR1就能获取一份协程栈。

当我们真正去执行时,却发现到处了一个寂寞。根本原因在于:

- logger.Infof()往os.Stdout输出

- 由于线上环境docker没有开启

debug模式,线上containerd-shim的os.Stdout被赋值为/dev/null

1 | COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME |

问题排查至此,似乎又僵住了!好在,之前看过一丢丢内核问题排查相关知识。虽然线上containerd-shim将协程栈的信息全部导出到了/dev/null中,但是我们还是有一些手段获取。

赶紧找组里的内核大佬帮忙,并很快确定了方案,基于kprobe,在操作系统往/dev/null设备写入协程栈时,拷贝一份内容写到内核日志中。方案实施起来也不复杂:

1 |

|

这里着重感谢睿哥提供的kprobe代码。kprobe的相关知识,感兴趣的自己学习下。

我们在线上部署了该kprobe内核模块之后,往containerd-shim发送SIGUSR1顺利获取了协程栈信息。终于补全了问题排查的最后一块拼盘。

补:这里重点感谢飞哥(不愧是老中医)的提醒,其实还有一个非常简单的方法获取协程栈:借助strace跟踪进程的系统调用。

线上containerd-shim的协程栈信息(删减了大量不重要协程栈,并调整了格式)展示如下:

1 | time="2021-06-23T16:35:07+08:00" level=info msg="=== BEGIN goroutine stack dump === |

分析以上协程栈可知:

- goroutine 1:

handleSignals确实阻塞在reaper.go:44,导致后续进程无法被收割 - goroutine 18:

checkProcesses阻塞在service.go:470,获取锁失败,但是并非是reaper.Default这把大锁 - goroutine 22661144:

shim.(*Service).Kill阻塞在reaper.go:82

其中,最为异常的是goroutine 22661144,其阻塞点代码如下:

1 | func (m *Monitor) Wait(c *exec.Cmd, ec chan runc.Exit) (int, error) { |

其中ec就是reaper.Default的一个订阅方。

死锁的形成如下:

- goroutine 22661144:等待着关联进程的退出事件到来,并且持有

Service.mu这把锁 - goroutine 18:等待获取

Service.mu这把锁后,再去处理订阅的事件 - goroutine 1:往所有订阅方发送事件

这三个协程形成了完美的死锁现场。

解决方案

清楚了问题的成因之后,解决问题的方案也很简单,只需调整默认的订阅者信道大小即可。社区优化方案有二:

- 调整信道大小,溢出事件自动忽略:https://github.com/containerd/containerd/pull/2748/files

- 优化锁逻辑:https://github.com/containerd/containerd/pull/2743 【好几个commit】

但是,当我们替换containerd-shim后,影响的也仅是在此之后创建的容器,而之前创建的容器仍然会出现该问题。

这个可以通过添加告警自愈的手段解决:直接杀containerd-shim进程。这样所有的进程都会由init进程完成收割。